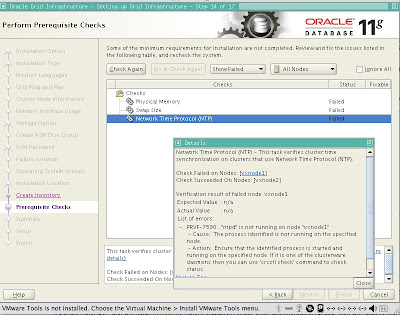

During the initial setup of the shared disks on my iSCSI SAN for the 11gR2 RAC cluster nodes, the Oracle 11gR2 Grid Infrastructure complained that it could not access the LUNs for the iSCSI storage. To verify and resolve this issue, I had to run the discovery commands for iSCSI as shown below.

First, on the iSCSI SAN we need to check the status of the LUNs. To do so, we issue the Linux SCSI target administration command tgtadm --lld iscsi --op show --mode target:

[root@san ~]# tgtadm --lld iscsi --op show --mode target

Target 1: iqm.mgmt.volumes-san

System information:

Driver: iscsi

State: ready

I_T nexus information:

I_T nexus: 2

Initiator: iqn.1994-05.com.redhat:7d8594f48e2a

Connection: 0

IP Address: 1.99.1.1

I_T nexus: 3

Initiator: iqn.1994-05.com.redhat:6cc883d783b6

Connection: 0

IP Address: 1.99.1.2

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: None

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk1.dat

LUN: 2

Type: disk

SCSI ID: IET 00010002

SCSI SN: beaf12

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk2.dat

LUN: 3

Type: disk

SCSI ID: IET 00010003

SCSI SN: beaf13

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk3.dat

LUN: 4

Type: disk

SCSI ID: IET 00010004

SCSI SN: beaf14

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk4.dat

LUN: 5

Type: disk

SCSI ID: IET 00010005

SCSI SN: beaf15

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk5.dat

LUN: 6

Type: disk

SCSI ID: IET 00010006

SCSI SN: beaf16

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk6.dat

LUN: 7

Type: disk

SCSI ID: IET 00010007

SCSI SN: beaf17

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk7.dat

LUN: 8

Type: disk

SCSI ID: IET 00010008

SCSI SN: beaf18

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk8.dat

LUN: 9

Type: disk

SCSI ID: IET 00010009

SCSI SN: beaf19

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk9.dat

LUN: 10

Type: disk

SCSI ID: IET 0001000a

SCSI SN: beaf110

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk10.dat

LUN: 11

Type: disk

SCSI ID: IET 0001000b

SCSI SN: beaf111

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk11.dat

LUN: 12

Type: disk

SCSI ID: IET 0001000c

SCSI SN: beaf112

Size: 2147 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /disks/disk12.dat

Account information:

ACL information:

ALL

[root@san ~]#
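With thirteen LUNs in the listing, checking each one by eye gets tedious. The following is a minimal sketch, not part of the original procedure, that condenses the tgtadm output to one line per disk LUN. The function name `summarize_luns` is my own, and it assumes the output keeps the field labels shown above (`LUN:`, `Type:`, `Online:`, `Backing store path:`).

```shell
#!/bin/sh
# Summarize `tgtadm --lld iscsi --op show --mode target` output read from stdin.
# Prints one line per disk LUN: "<lun> <online> <backing store path>".
# summarize_luns is a hypothetical helper name, not a tgtadm feature.
summarize_luns() {
    awk '
        # "LUN: 3" starts a new LUN record ("LUN information:" does not match).
        $1 == "LUN:"    { lun = $2; type = ""; online = "" }
        $1 == "Type:"   { type = $2 }
        $1 == "Online:" { online = $2 }
        # "Backing store path: ..." is the last field of each record;
        # the controller LUN (LUN 0) is skipped because its type is not "disk".
        $1 == "Backing" && $3 == "path:" {
            if (type == "disk") print lun, online, $4
        }
    '
}
```

On the SAN host this could be used as `tgtadm --lld iscsi --op show --mode target | summarize_luns`, which for the listing above would print lines such as `1 Yes /disks/disk1.dat`. Any LUN showing `No` in the second column would be the first place to look.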

Good, so on my iSCSI SAN I can see the LUNs. The next step is to initiate discovery on the second RAC node, which cannot see the shared disks.

[root@vxnode2 ~]# iscsiadm -m discovery -t sendtargets -p san.mgmt.example.com

1.99.1.254:3260,1 iqm.mgmt.volumes-san
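Each sendtargets record has the form `<ip>:<port>,<portal group tag> <target name>`. As a rough sketch (the helper names `parse_sendtargets` and `print_login_commands` are mine, not part of iscsiadm), the record can be split into portal and target, and the matching `iscsiadm` login command printed for review rather than executed:

```shell
#!/bin/sh
# Split sendtargets discovery records ("<ip>:<port>,<tpgt> <target name>")
# read from stdin into "<portal> <target>" pairs.
parse_sendtargets() {
    while read -r portal_tpgt target; do
        [ -n "$target" ] || continue     # skip blank or malformed lines
        portal=${portal_tpgt%,*}         # drop the ",<tpgt>" suffix
        printf '%s %s\n' "$portal" "$target"
    done
}

# Print (do not run) the node login command for each discovered target.
print_login_commands() {
    parse_sendtargets | while read -r portal target; do
        printf 'iscsiadm -m node -T %s -p %s --login\n' "$target" "$portal"
    done
}
```

For example, `iscsiadm -m discovery -t sendtargets -p san.mgmt.example.com | print_login_commands` would print the login command for the target above. Printing instead of executing is a deliberate choice here, so the command can be checked before it touches the node's iSCSI sessions.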

You should see the SAN's portal address and target name, as shown above. If not, you need to rerun the discovery process and check that the iSCSI services are running on the cluster node. Next, verify that the disks are accessible and have the correct ownership and permissions:

[root@vxnode2 ~]# ls -l /dev/sd*

brw-r----- 1 root disk 8, 0 May 16 03:28 /dev/sda

brw-r----- 1 root disk 8, 1 May 16 03:29 /dev/sda1

brw-r----- 1 root disk 8, 2 May 16 03:28 /dev/sda2

brw-r----- 1 oracle oinstall 8, 16 May 16 03:30 /dev/sdb

brw-r----- 1 oracle oinstall 8, 32 May 16 03:30 /dev/sdc

brw-r----- 1 oracle oinstall 8, 48 May 16 03:30 /dev/sdd

brw-r----- 1 oracle oinstall 8, 64 May 16 03:30 /dev/sde

brw-r----- 1 oracle oinstall 8, 80 May 16 03:30 /dev/sdf

brw-r----- 1 oracle oinstall 8, 96 May 16 03:30 /dev/sdg

brw-r----- 1 oracle oinstall 8, 112 May 16 03:30 /dev/sdh

brw-r----- 1 oracle oinstall 8, 128 May 16 03:30 /dev/sdi

brw-r----- 1 oracle oinstall 8, 144 May 16 03:30 /dev/sdj

brw-r----- 1 oracle oinstall 8, 160 May 16 03:30 /dev/sdk

brw-r----- 1 oracle oinstall 8, 176 May 16 03:30 /dev/sdl

brw-r----- 1 oracle oinstall 8, 192 May 16 03:30 /dev/sdm

These should match for both cluster nodes.
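One way to check that the listings match is to strip the timestamps, which legitimately differ, and diff what remains. This is only a sketch under assumptions: the helper names are hypothetical, the two listings are assumed to have been saved to files beforehand, and it assumes the same device name refers to the same LUN on both nodes, which is not guaranteed.

```shell
#!/bin/sh
# Reduce `ls -l /dev/sd*` output (stdin) to "<mode> <owner> <group> <device>",
# sorted by device name. Link counts, major/minor numbers and timestamps are dropped.
device_ownership() {
    awk '{ print $1, $3, $4, $NF }' | sort -k4
}

# Compare two saved listings (file paths given as $1 and $2).
# Prints any differences and returns diff's exit status (0 = identical).
compare_nodes() {
    tmp1=$(mktemp) && tmp2=$(mktemp) || return 2
    device_ownership < "$1" > "$tmp1"
    device_ownership < "$2" > "$tmp2"
    diff "$tmp1" "$tmp2"
    rc=$?
    rm -f "$tmp1" "$tmp2"
    return "$rc"
}
```

After saving `ls -l /dev/sd*` from each node into, say, `node1.txt` and `node2.txt` (file names chosen for illustration), `compare_nodes node1.txt node2.txt` prints nothing when mode, owner and group agree for every device.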

Now we can kick off the installation for 11gR2 RAC as shown below.

Are you ready for a coffee break? I sure am! OK, so this will take a LONG time to run, since I am using VMware Fusion on my 4 GB MacBook Pro; expect it to run for half an hour or so.

I will cover how to set up an iSCSI SAN for Oracle 11gR2 RAC in a future blog post.

My Oracle Support has a nice walkthrough guide on how to configure Openfiler iSCSI for Oracle RAC environments.

Using Openfiler iSCSI with an Oracle RAC database on Linux [ID 371434.1]